we’ve lately felt as if we’re spending more time than usual troubleshooting our library’s licensed e-resources. saying “i feel like i’m spending a lot of time on this” doesn’t really cut it when it comes time to staff a task appropriately, so we’re making an attempt to quantify our process. knowing how much time we’re spending will tell us whether we have enough people responsible for responding to patron problem reports, since providing timely customer service is part of our mission.

enter: gimlet. gimlet is usually used to count reference desk transactions, but it’s easy to see how it can be adapted to count other kinds of service point interactions. just as the reference department gets emails asking for help troubleshooting a research question, my department gets emails asking for help troubleshooting e-resource access. since our reference department already uses gimlet, we thought we’d give it a try too.

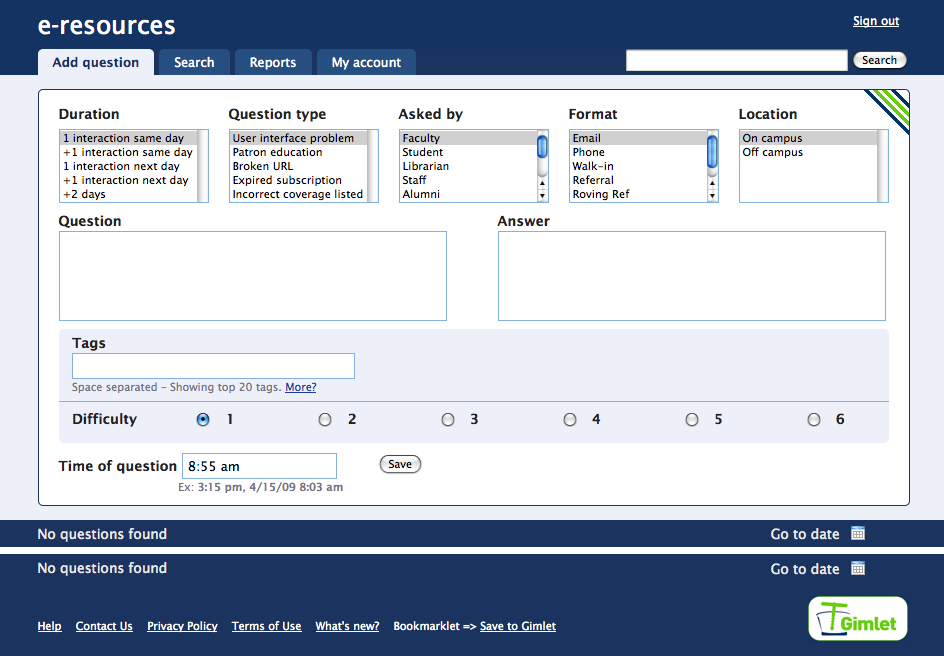

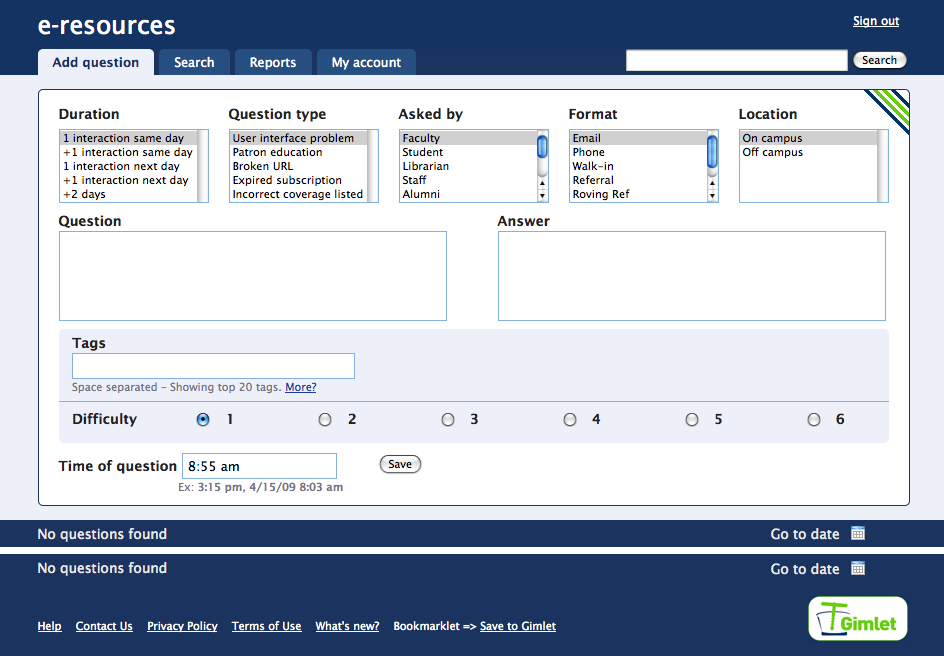

setting it up was super easy. there are five categories built into the system, and you can define the variables that fall under each heading. the five categories are: duration; question type; asked by; format; location. under duration we added these variables: 1 interaction, same day; more than 1 interaction, same day; 1 interaction, next day; more than 1 interaction, next day; more than two days. tracking how long it takes to resolve a problem, and how many interactions with the patron it takes to get the information we need, will help us understand the complexity of the problems we’re troubleshooting. often we can get what we need from the patron in one interaction and resolve the problem the same day, but sometimes it drags on for weeks. counting how often we respond quickly and how often problems lag will be instrumental not only in identifying our own staffing needs but also in evaluating whether vendors respond in a timely way. if we’re waiting on a vendor to fix something and they consistently take more than two days, we may choose not to work with that vendor in the future.

here are the other categories and the variables we’ve entered:

- question type: user interface problem; patron education; broken URL; expired subscription; incorrect coverage listed.
- asked by: faculty; student; librarian; staff; alumni; law school; visitor; other.
- format: email; phone; walk-in; referral; roving ref; text chat.
- location: on campus; off campus.

we’ll also be adding tags for the names of resources that are reported as problematic, so we can get an idea of how often, for example, an ebsco database goes wonky.

we’ll be experimenting with the READ (Reference Effort Assessment Data) scale, shown as Difficulty in the screenshot, to help us assess the complexity of the questions we’re asked. stay tuned for more on that later.

you can see from the screenshot that our database is a blank slate, ready for questions to be asked and answered. i’ll report back later to let you know how it’s going! in the meantime, if you use gimlet to track the troubleshooting of e-resources, i’d love to hear from you.

![]()